I’ve reached the point where my little home server runs enough services that bookmarking them all as http://nas-address:port is getting annoying. I’ve got 3 Docker stacks going (I think) and 2 networks on my Synology. What’s the best or easiest way to reach them at something like http://pi-hole?

I’m running it all on a Synology DS920+ behind my ISP’s modem/router, so everything is on the 192.168.1.0/24 subnet, and I’ve got Tailscale on it as an exit node, if that helps.

You’ll want a reverse proxy like Traefik, Caddy, or nginx in order to get everything onto 80 or 443, and you’ll want to use your pihole to point domains/subdomains to your NAS.
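A minimal sketch of that setup, assuming Caddy as the proxy running on the NAS itself: from one service-to-port map it prints the local DNS records to add in Pi-hole (every name pointing at the NAS) and matching Caddyfile site blocks. The NAS address, the `home.arpa` suffix (reserved for home networks by RFC 8375) and the service ports are hypothetical placeholders.

```python
"""Generate Pi-hole local DNS records and a Caddyfile from one service map.

Assumptions (hypothetical, adjust to your setup):
  - Caddy runs on the NAS at NAS_IP and listens on port 80
  - every service is reachable as <service>.<DOMAIN>
"""

NAS_IP = "192.168.1.10"  # hypothetical NAS address on 192.168.1.0/24
DOMAIN = "home.arpa"     # suffix reserved for home networks (RFC 8375)

# Hypothetical service -> host port map; replace with your own.
SERVICES = {
    "pihole": 8080,
    "jellyfin": 8096,
    "sonarr": 8989,
}


def dns_records(services: dict[str, int], ip: str, domain: str) -> list[str]:
    """One 'IP hostname' line per service: every name points at the proxy host."""
    return [f"{ip} {name}.{domain}" for name in sorted(services)]


def caddyfile(services: dict[str, int], ip: str, domain: str) -> str:
    """One Caddy site block per service.

    The explicit http:// scheme keeps Caddy from trying to obtain public
    certificates for names that only exist on the LAN.
    """
    blocks = []
    for name in sorted(services):
        blocks.append(
            f"http://{name}.{domain} {{\n"
            f"\treverse_proxy {ip}:{services[name]}\n"
            f"}}\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    print("\n".join(dns_records(SERVICES, NAS_IP, DOMAIN)))
    print()
    print(caddyfile(SERVICES, NAS_IP, DOMAIN))
```

Generating both outputs from the same map keeps the DNS names and the proxy routes from drifting apart as services are added.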

To add to that… if OP owns a domain, they could issue an SSL cert for a subdomain like lab.example.com, point the A record at the (hopefully static) IP of the router, and port-forward 443 to the reverse proxy.

Or, if OP doesn’t own a domain, they could just use any custom word, like jellyfin.op.

Also, having a nice homepage is useful. I prefer Homepage.

Or just use a dynamic DNS service like DuckDNS and point a CNAME at your DuckDNS name. Better still, run a local cron job that updates your Cloudflare DNS records, etc. There are lots of better options for home hosting than hoping your IP stays static.
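A minimal sketch of the "cron job updating Cloudflare" option, assuming Cloudflare's v4 API and a scoped API token with DNS edit permission. The token, zone ID, record ID and `lab.example.com` are placeholders, and api.ipify.org is just one public "what is my IP" service among several. It only sends an update when the public IP differs from what the A record currently holds.

```python
import json
import urllib.request

# Placeholders: fill these in from your own Cloudflare account.
API_TOKEN = "YOUR_API_TOKEN"
ZONE_ID = "YOUR_ZONE_ID"
RECORD_ID = "YOUR_RECORD_ID"
RECORD_NAME = "lab.example.com"

API = "https://api.cloudflare.com/client/v4"


def public_ip() -> str:
    """Ask a public echo service which IP our traffic comes from."""
    with urllib.request.urlopen("https://api.ipify.org", timeout=10) as resp:
        return resp.read().decode().strip()


def current_record_ip() -> str:
    """Read the IP the A record currently points at."""
    req = urllib.request.Request(
        f"{API}/zones/{ZONE_ID}/dns_records/{RECORD_ID}",
        headers={"Authorization": f"Bearer {API_TOKEN}"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)["result"]["content"]


def build_update(ip: str) -> urllib.request.Request:
    """Build (but don't send) the PUT request that rewrites the A record."""
    body = {"type": "A", "name": RECORD_NAME, "content": ip, "ttl": 300, "proxied": False}
    return urllib.request.Request(
        f"{API}/zones/{ZONE_ID}/dns_records/{RECORD_ID}",
        data=json.dumps(body).encode(),
        method="PUT",
        headers={"Authorization": f"Bearer {API_TOKEN}", "Content-Type": "application/json"},
    )


def main() -> None:
    ip = public_ip()
    if ip != current_record_ip():
        with urllib.request.urlopen(build_update(ip), timeout=10) as resp:
            print("updated:", json.load(resp)["success"])


if __name__ == "__main__":
    if "YOUR_" in API_TOKEN + ZONE_ID + RECORD_ID:
        print("Fill in API_TOKEN, ZONE_ID and RECORD_ID first.")
    else:
        main()
```

Run it every few minutes from cron (e.g. `*/5 * * * *`); reading the record first avoids rewriting it on every run.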

By “hopefully”… I actually meant that OP might already have a static IP.

Sorry, I read “hopefully” as an imperative. At least in the US, static home IPs are very rare, so I generally assume some form of DDNS will be needed for any home hosting solution.

Where I live they are rare too. They used to be more common back in the day, but now they’re mostly offered to business customers.

But you’re right… the “hopefully” could’ve been easily misinterpreted as in “hoping the IP doesn’t change anytime soon, or ever”

Sorry for the silly questions, but I’m new to this and still learning how this stuff works. Is there a guide for noobs that you’re aware of? I own a domain and I’m trying to do exactly this.

Also, would you recommend Traefik over nginx? I’ve been told that if I want to use these skills in a professional environment I should learn nginx, but I’ve read it doesn’t have an interface and the configuration is manual.

I’ve got pterodactyl running some game servers locally I’d like to open to my friends and this should be a secure way to do this.

I also read below I should use a DNS if I don’t have a static IP. Does that throw a wrench in all this?

I don’t know of any beginner tutorial, since I learned it along the way.

But in a nutshell: most web servers used as reverse proxies (nginx, Caddy, Traefik) are configured manually. However, there’s Nginx Proxy Manager, which provides a web GUI.

Regarding DNS: you need DNS regardless of whether your IP is fixed. What you probably mean is dynDNS (dynamic DNS), which you’ll definitely need if your IP changes.

I use nginx proxy manager to reach all my services via servicename.domain.com for example.

https://nginxproxymanager.com/

Nginx Proxy Manager is really simple to use. Again, it runs as a container and uses Let’s Encrypt certificates.

Ugh. I really gotta switch to this. I started out by using Apache because that’s what I use for work, and just what I know. I create the configs and get the certificates from Let’s Encrypt manually. But now I have so many services that switching to something else feels daunting. But it’s kind of a pain in the ass every time I add something new.

Other than writing an entry in my docker-compose.yml that was all the configuration required. The rest is in the GUI and it’s super simple.

Oh, I don’t have a GUI for my server. But I’m sure they have a command line interface for it, right?

I mean nginx proxy manager is managed by a GUI/web interface.

Oh right a web interface. That makes more sense. 😅

Yeah, I really do need to get around to setting that up…

> get the certificates from Let’s Encrypt manually

https://httpd.apache.org/docs/2.4/mod/mod_md.html: just add `MDomain myapp.example.org` to your config and it will generate Let’s Encrypt certs automatically.

> it’s kind of a pain in the ass every time I add something new.

You will have to do some reverse proxy configuration every time you add a new app, regardless of the method (RP management GUIs are just fancy GUIs on top of the config file, and “auto-discovery” solutions like Traefik/Caddy require you to add your RP config as Docker labels). The way I deal with it is having a basic RP config template for new applications [1]. Most of the time, `ProxyPass`/`ProxyPassReverse` is enough, unless the app documentation says otherwise.
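A sketch of the "basic RP config template" approach, rendered from Python so each new app is one function call. It assumes Apache with mod_md, mod_ssl, mod_proxy and mod_proxy_http loaded, and the app listening on the same host; the app name, domain and port are hypothetical. With `MDomain` in place, the vhost needs `SSLEngine on` but no certificate file paths, because mod_md supplies the certificate.

```python
"""Render an Apache reverse-proxy vhost for a new app (hypothetical template)."""

from string import Template

# MDomain asks mod_md to obtain and renew a Let's Encrypt cert for the name;
# the vhost then only needs "SSLEngine on", no certificate file directives.
VHOST = Template("""\
MDomain $host

<VirtualHost *:443>
    ServerName $host
    SSLEngine on
    ProxyPass / http://127.0.0.1:$port/
    ProxyPassReverse / http://127.0.0.1:$port/
</VirtualHost>
""")


def render_vhost(app: str, domain: str, port: int) -> str:
    """Return the config for <app>.<domain> proxied to a local port."""
    if not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    return VHOST.substitute(host=f"{app}.{domain}", port=port)


if __name__ == "__main__":
    # Hypothetical example: Jellyfin on its default port 8096.
    print(render_vhost("jellyfin", "example.org", 8096))
```

Apps that need websockets or special headers still need extra directives, as the app documentation dictates.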

Maybe a dashboard would help? gethomepage.dev works really well for me.

You need a reverse proxy. That’s what they do.

The next thing is fully subjective, but I would not recommend Nginx Proxy Manager. It has a neat GUI, but in my setups it has failed often, especially on public servers with Let’s Encrypt certificates.

Maybe I fucked something up, but I can really recommend Caddy instead. It’s configured from a plain-text Caddyfile, which I find much more flexible for custom rules and so on. Also, configuring Caddy is stupidly simple, I love it.

Reverse proxy. Caddy is the easiest to configure, HAProxy has the least “bloat” (subjective opinion, but still), and NGINX + Proxy Manager seems to be popular and widely used. Traefik has a bit of a learning curve but has great features if you need them.

Or just use plain Apache httpd.

I love Traefik! When I started, I tried NGINX, but couldn’t wrap my head around it. So I tried Caddy: pretty easy to understand, and I used it for a while. Then I had demands Caddy couldn’t meet and stumbled upon Traefik. As you said, there’s a learning curve, but for me it was much easier than NGINX. I like that you can put the Traefik config inside the Compose files and that a service is only active in Traefik while its containers are up and running. I added CrowdSec to my external-facing Traefik instance, and I also use a plain Traefik instance for all my internal services. And it can forward HTTP, HTTPS, TCP and UDP.
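To illustrate what "Traefik config inside the Compose files" looks like: Traefik's Docker provider reads routers and services from container labels. This sketch builds that label set for one service; the router name, hostname and port are hypothetical, and it assumes Traefik runs with the Docker provider, an entrypoint named `web`, and a Docker network shared with the container.

```python
"""Build Traefik Docker-provider labels for one service (hypothetical names)."""


def traefik_labels(name: str, host: str, port: int, entrypoint: str = "web") -> dict[str, str]:
    """Labels Traefik reads from a container to route Host(host) to its port.

    Traefik only builds the router while the labelled container is running,
    so routes appear and disappear together with the Compose stack.
    """
    router = f"traefik.http.routers.{name}"
    return {
        "traefik.enable": "true",
        f"{router}.rule": f"Host(`{host}`)",
        f"{router}.entrypoints": entrypoint,
        # Port *inside* the container; no "ports:" mapping is needed for this
        # as long as Traefik shares a Docker network with the container.
        f"traefik.http.services.{name}.loadbalancer.server.port": str(port),
    }


if __name__ == "__main__":
    # Print in the "- key=value" form used under a Compose service's labels:
    for key, value in traefik_labels("sonarr", "sonarr.example.com", 8989).items():
        print(f"      - {key}={value}")
```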

Would be very interested to know which needs Caddy didn’t meet, and why you didn’t consider HAProxy or Apache.

Yeah, nginx is way too complicated if you aren’t familiar with it and using it daily in a corporate environment.

Traefik is elegant and simple when you get the basics, but lacks serious documentation for more complicated stuff.

Haven’t tried other proxies, but why should I? Traefik works great, and I’ve never had any issues that would make me want to change!

There are a few options. Personally, I use nginx. You can build a proxy container running nginx and then direct traffic to other containers.

I do things like `serviceX.my.domain`, and the proxy knows to send that traffic to serviceX. An added benefit is that you now have one ingress to your containers, so you don’t need to memorize all of those ports.

I know Traefik is a thing that other people like.

If you want something really simple, you could also use Heimdall, which lets you register the systems you have running. You open Heimdall first and it directs you to what you have running, but that’s essentially just fancy bookmarks.

I looked at Heimdall and came to the same conclusion: I could just whip up a static HTML page of links, or make bookmarks, more easily than maintaining another Docker container.
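For the static-HTML-page route, a sketch that builds such a page from a name-to-port map. The NAS address and ports are hypothetical placeholders; redirect the output to a file (e.g. `python3 links.py > services.html`) and bookmark it.

```python
"""Print a static HTML page of links to each service (no extra container)."""

from html import escape

# Hypothetical NAS address and service ports; replace with your own.
NAS = "192.168.1.10"
SERVICES = {"Pi-hole": 8080, "Jellyfin": 8096, "Sonarr": 8989}


def links_page(nas: str, services: dict[str, int]) -> str:
    """One <li> link per service, sorted by name, with names HTML-escaped."""
    items = "\n".join(
        f'  <li><a href="http://{escape(nas)}:{port}/">{escape(name)}</a></li>'
        for name, port in sorted(services.items())
    )
    return f"<!doctype html>\n<title>Home services</title>\n<ul>\n{items}\n</ul>\n"


if __name__ == "__main__":
    print(links_page(NAS, SERVICES), end="")
```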

Yep, tried it and yeah just a fancy page for bookmarks - although it did make a nice home/landing page for me whenever I opened a new tab.

Nginx is your friend then, set up a good proxy and it’ll be much easier to navigate your network.

Everybody is saying a reverse proxy, which is correct, but you said Docker stacks, so if that means Docker Compose, your container names are also in DNS and you can use them.

I can’t remember whether the port is still needed, though.

AFAIK docker-compose only puts the container names in DNS for other containers in the same stack (or in the same configured network, if applicable), not for the host system and not for other systems on the local LAN.
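A quick way to see that scoping in practice is to look a container name up from different places. A minimal standard-library sketch; `sonarr` is a hypothetical container name, and running it inside a container assumes that image ships Python (many don't).

```python
import socket
import sys
from typing import Optional


def resolve(name: str) -> Optional[str]:
    """Return the IPv4 address the system resolver gives for name, or None.

    Inside a container, the resolver is Docker's embedded DNS, which knows the
    names of containers on the same user-defined network.
    """
    try:
        return socket.gethostbyname(name)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None


if __name__ == "__main__":
    # e.g. `python3 resolve.py sonarr`: inside a container on the same network
    # this should print a private Docker address; on the host it is usually None.
    for name in sys.argv[1:]:
        print(name, resolve(name))
```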


It might work if you put them on the same Docker network? I use Kubernetes and it definitely has this feature.

In general, yes. You can think of each container in a Docker network as a host, and Docker makes these hosts discoverable to each other. Docker also supports other network types that may not follow this concept if you configure them that way. For example, if you force all containers to use one container’s networking stack (I do this with gluetun so I can run everything through a VPN), all services will be reachable only via the gluetun host rather than as individual service hosts.

Furthermore, services in a container are not exposed outside it by default. You must explicitly declare which container ports are reachable from your host (the `ports:` option).
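To check whether a container port was actually published to the host, a plain TCP connect from the host is enough. A minimal standard-library sketch; the two ports in the example are hypothetical (one assumed published via `ports:`, one not).

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    # Hypothetical: 8989 published with `ports: - "8989:8989"`, 9696 left unpublished.
    for port in (8989, 9696):
        print(port, "open" if port_open("127.0.0.1", port) else "closed")
```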

But getting back to the question at hand: what you’re looking for is a reverse proxy. It’s a program that accepts requests from multiple clients and forwards them somewhere else. You connect to the proxy, and it can tell from how you connect (the URL) whether to send the request to Sonarr or Radarr. http://sonarr.localhost and http://radarr.localhost will both route to your proxy, and the proxy will pass them to the respective services based on how you configure it. You can use nginx for this, but I’d recommend Caddy, as it’s what I’m using and it makes setting up things like this a breeze.
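To make "the proxy can tell from the URL" concrete, here is a toy host-based reverse proxy using only Python's standard library. It is an illustration, not a replacement for Caddy or nginx: GET only, no TLS, no streaming, and it does not forward request headers. The backend addresses are hypothetical (Sonarr and Radarr on their usual ports 8989 and 7878).

```python
"""Toy name-based reverse proxy (GET only) to illustrate what Caddy/nginx do."""

import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional

# Hypothetical routing table: requested host name -> backend base URL.
ROUTES = {
    "sonarr.localhost": "http://127.0.0.1:8989",
    "radarr.localhost": "http://127.0.0.1:7878",
}


def pick_backend(host_header: Optional[str]) -> Optional[str]:
    """Map a Host header (possibly with :port) to a backend, or None."""
    if not host_header:
        return None
    host = host_header.split(":", 1)[0].lower()
    return ROUTES.get(host)


class ProxyHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        backend = pick_backend(self.headers.get("Host"))
        if backend is None:
            self.send_error(404, "no route for this host")
            return
        try:
            with urllib.request.urlopen(backend + self.path, timeout=10) as resp:
                status, body = resp.status, resp.read()
                ctype = resp.headers.get("Content-Type", "application/octet-stream")
        except urllib.error.HTTPError as err:  # backend answered with 4xx/5xx
            status, body = err.code, err.read()
            ctype = err.headers.get("Content-Type", "text/plain")
        except urllib.error.URLError:  # backend not reachable at all
            self.send_error(502, "backend unreachable")
            return
        self.send_response(status)
        self.send_header("Content-Type", ctype)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8080) -> None:
    """Listen on all interfaces; 8080 avoids needing root (a real proxy uses 80/443)."""
    ThreadingHTTPServer(("0.0.0.0", port), ProxyHandler).serve_forever()
```

After calling `serve()`, requesting http://sonarr.localhost:8080/ in a browser that resolves `*.localhost` to loopback would be forwarded to the Sonarr backend, assuming it is running; unknown names get a 404 from the proxy itself.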

Yes, that’s how it’s supposed to work if they’re all on the same Docker network (e.g. the same Compose file). In practice, it can be flaky, and you’re much better off using ip:port.

If you don’t set the network, doesn’t it default to host?

I’m pretty sure it’s available locally…

Yes, but maybe not over the network, so it might not be as useful for OP.

Correct!

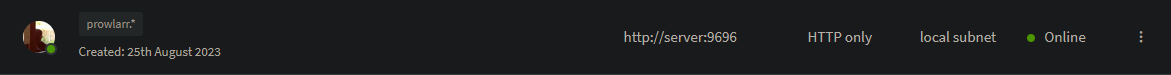

The way I got it set up using Pihole and NginxProxyManager in Unraid:

- Deploy NginxProxyManager using custom: br0 with a separate IP address

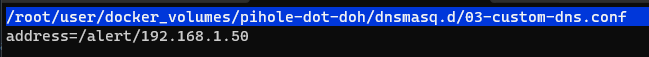

- Pi-hole can’t do wildcards unless you create `pihole/dnsmasq.d/03-custom-dns.conf` and add `address=/tld/npm_ip`; this way `*.tld` goes to the stated IP.

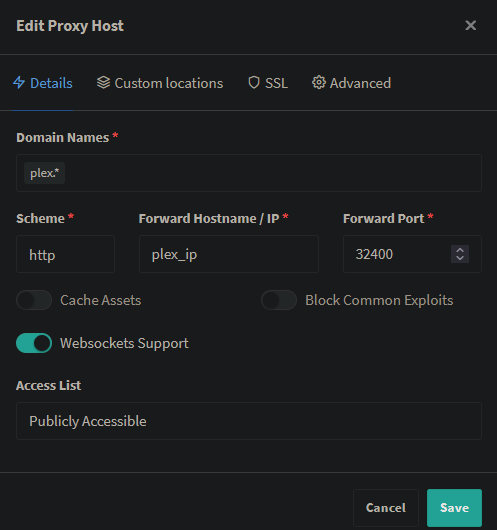

- Now your plex.tld goes to your nginx proxy manager IP, and it needs to handle the subdomain.

NginxProxyManager can do wildcards both ways, so you can create `plex.*` to go to the Plex IP and port, or `sonarr.*` to go to your server IP and the Sonarr port.
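The `address=/tld/npm_ip` line works because a dnsmasq `address=` rule answers for the named domain and every name under it. A small Python model of that matching, for illustration only (dnsmasq does this itself; `tld` and the IP below are placeholders).

```python
"""Model of dnsmasq's address=/domain/ip wildcard rule (illustration only)."""

NPM_IP = "192.168.1.50"  # hypothetical Nginx Proxy Manager address on br0


def address_line(domain: str, ip: str) -> str:
    """Line to add to a dnsmasq.d/*.conf file, e.g. 03-custom-dns.conf."""
    return f"address=/{domain}/{ip}"


def matches(query: str, domain: str) -> bool:
    """True if dnsmasq would answer `query` with this rule's IP.

    The rule covers the domain itself and every subdomain, so plex.tld and
    sonarr.tld both resolve to the proxy, but plex.tldx does not.
    """
    q = query.lower().rstrip(".")
    d = domain.lower().strip(".")
    return q == d or q.endswith("." + d)


if __name__ == "__main__":
    print(address_line("tld", NPM_IP))
    for name in ("plex.tld", "sonarr.tld", "example.com"):
        print(name, "->", NPM_IP if matches(name, "tld") else "normal upstream lookup")
```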

Please tell me if you need more details.

Thanks to everyone who replied, but I gave up on this. It turns out that Synology’s DSM includes its own nginx, which isn’t exposed as configurable, and it commandeers ports 443 and 5000; any other port seems to redirect to 5001(?), which is the DSM login. I’ll just remember all the ports, or maybe get Heimdall spun up!

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| DNS | Domain Name Service/System |
| HTTP | Hypertext Transfer Protocol, the Web |
| IP | Internet Protocol |
| NAS | Network-Attached Storage |
| SSL | Secure Sockets Layer, for transparent encryption |
| TCP | Transmission Control Protocol, most often over IP |
| UDP | User Datagram Protocol, for real-time communications |
| nginx | Popular HTTP server |

[Thread #288 for this sub, first seen 18th Nov 2023, 19:15]